Research Article | Open Access

Published Online: 27 December 2025

Responsible AI in the Age of Generative Media: A Comparative Study of Ethical and Transparent AI Frameworks in India and the USA

Bhavya S. Tripathi1,*, Shrawani C. Gaonkar1 and Anagha Dhavalikar2

1 Department of Artificial Intelligence and Data Science, Vasantdada Patil Pratishthan’s College of Engineering & Visual Arts, Mumbai, Maharashtra, 400022, India.

2 Department of Electronics and Computer Science, Vasantdada Patil Pratishthan’s College of Engineering & Visual Arts, Mumbai, Maharashtra, 400022, India.

*Email: bhavyatripathi02@gmail.com (B. S. Tripathi)

J. Collect. Sci. Sustain., 2025, 1(3), 25411 https://doi.org/10.64189/css.25411

Received: 13 November 2025; Revised: 25 December 2025; Accepted: 25 December 2025

Abstract

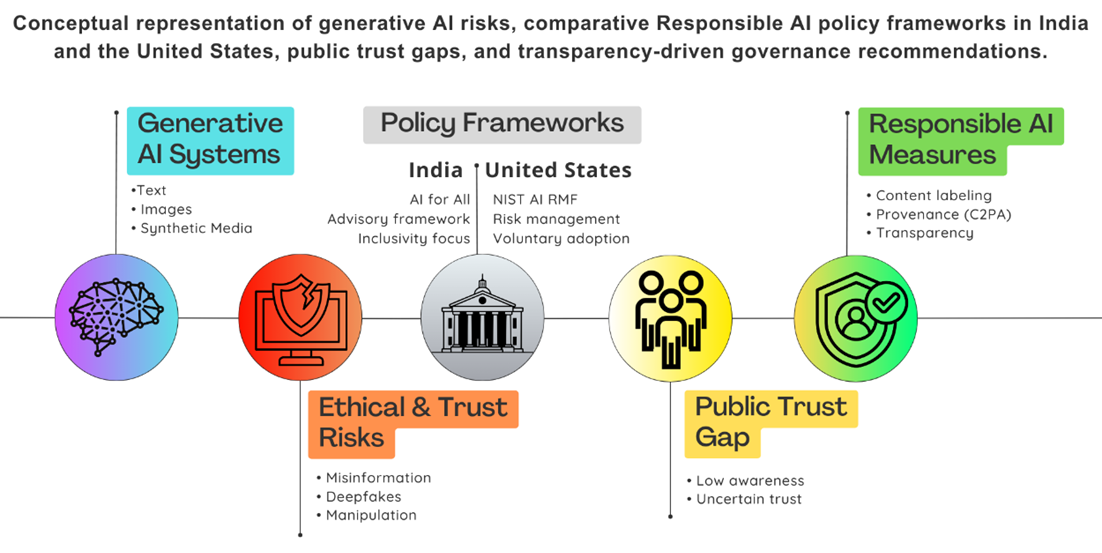

Advances in generative artificial intelligence, such as Google Gemini, ChatGPT and DALL·E, are opening new creative possibilities in digital media but are also raising pressing concerns about misinformation, deepfakes and declining trust in media outlets. This paper examines policy-level Responsible Artificial Intelligence (RAI) efforts in India and the United States. It compares the distinct and shared approaches each country takes to generative media, where policy aims to balance innovation with transparency, accountability and fairness. India's official AI for All strategy emphasizes inclusivity and social development, but it currently lacks concrete enforcement mechanisms and safeguards against the misuse of generative media. The US relies on the National Institute of Standards and Technology (NIST) AI Risk Management Framework, which emphasizes risk assessment, technical robustness and accountability, but it lacks a robust regulatory mechanism to align its varied state-level and sector-specific initiatives. Alongside the policy analysis, we designed and conducted a pilot survey of college students and working professionals through September 2025 to capture awareness of, trust in and concerns about Responsible AI. Preliminary findings show relatively low exposure to national RAI policy frameworks but strong concern about potential misuse of generative AI, particularly deepfakes, manipulated images and the absence of checks on content authenticity. Respondents showed especially strong support for mandatory labeling of AI-generated media. We conclude that India and the USA follow both parallel and diverging paths on RAI for generative media, and that in both countries a gap separates policy aspirations from public understanding. Moving forward, we see a need for greater policy clarity, cross-border coordination and public outreach to foster transparency and responsibility in the adoption of AI for media.

Graphical Abstract

Novelty statement

This study uniquely integrates India–USA AI policy comparison with empirical survey evidence highlighting public trust and awareness gaps.